A performance test execution report summarises the results of a single test execution. These reports are produced after each execution and usually contain varying levels of detail.

In my 10 years of performance test consulting, I have seen reports that are one-pagers, output directly by the tool with very little user interaction. I have also seen reports that go into significant detail and require hours of data manipulation to produce. Personally, I prefer the latter.

It's not that I am a stickler for documentation. It's more that I believe an execution report should tell me all of the interesting parts of a test. From response times to resource utilization, I want to know as much as I can about injecting a particular load level against an application. When I walk in to work in the morning, I want to be able to answer the question “How did last night's test go?”.

While working at a major bank, I had the opportunity to define the test execution report templates for a major application. I worked closely with the production availability team to create a report template that provided everything required to determine whether changes could result in performance degradation. The sign-off process involved a pile of execution reports that were analysed top to bottom and signed off one by one. Needless to say, I learned a lot about which content was vital for these reports, and which could be omitted.

Unfortunately, despite a very happy production support team and a nomination by the client for a customer service award, there was one problem: the reports took too long to produce. Few elements of the report could simply be copied out of the customer's performance tool (LoadRunner), and many required parsing raw results through Excel to get the desired output.

After a successful release, I left the client and moved on to a new challenge. I wanted to find a way to maintain the quality of the reports while reducing the amount of effort required to produce them. I heard that after I left, the client had automated some of the Excel processing behind the report. While this had reduced the time required, it was still a very hands-on process. I knew there must be a better way.

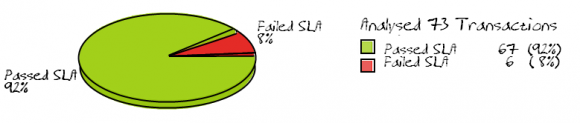

Six months on, I now receive 95% of the elements of this original report in my inbox automatically after each test execution. I have reached a point where my team can spend more time on analysis and less time on producing graphs. It is now clear within minutes of an execution whether there is a major problem. At last, I have my cake and am eating it too.

Over the next couple of weeks, I will document the journey from looking at the individual elements of an execution report to having an automated report in my inbox after each test.